Vision-based navigation to support agricultural tasks

An autonomous mobile robot is a machine that can extract information from its environment and use that information, together with its own knowledge, to move safely while fulfilling a purpose. Navigation is one of the most important and challenging problems to address when working with an autonomous mobile robot.

Navigation is a collection of algorithms that address the difficulties that arise when trying to answer the following questions: where should I go, what is the best way to get there, where have I been (map building), and where am I (localization). Developing this collection of algorithms is not simple, for several reasons. The most relevant are the incorporation of noisy sensor information into the map and the strong coupling between localization and map building: to build a map the robot must be localized, and to localize itself it needs a map.
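The "to localize you need a map" half of that coupling can be seen in a textbook toy: a 1-D histogram (discrete Bayes) filter that estimates the robot's cell in a cyclic corridor only because it is given the map in advance. This is a minimal sketch for illustration, not part of the thesis system; the map, the sensor probabilities `p_hit`/`p_miss`, and all function names are hypothetical.

```python
# Toy 1-D histogram (discrete Bayes) filter: localization against a KNOWN map.
# world_map[i] == 1 means cell i contains a landmark the robot can detect.

def normalize(belief):
    """Rescale a belief so its probabilities sum to 1."""
    total = sum(belief)
    return [b / total for b in belief]


def sense(belief, world_map, measurement, p_hit=0.8, p_miss=0.2):
    """Measurement update: weight each cell by how well the map explains
    the observation (hypothetical sensor model p_hit / p_miss)."""
    weighted = [
        b * (p_hit if cell == measurement else p_miss)
        for b, cell in zip(belief, world_map)
    ]
    return normalize(weighted)


def move(belief, step):
    """Motion update: exact cyclic shift by `step` cells (no motion noise)."""
    n = len(belief)
    return [belief[(i - step) % n] for i in range(n)]


def localize(world_map, measurements, step=1):
    """Start from a uniform belief; alternate sensing and moving one cell."""
    belief = [1.0 / len(world_map)] * len(world_map)
    for k, z in enumerate(measurements):
        if k > 0:
            belief = move(belief, step)
        belief = sense(belief, world_map, z)
    return belief


if __name__ == "__main__":
    belief = localize([1, 0, 0, 1, 0], [1, 0, 0])
    print(max(range(len(belief)), key=belief.__getitem__))  # most likely cell
```

After the first observation the belief is split between the two landmark cells; only by combining motion with further observations against the map does it concentrate on a single cell. Without `world_map`, the measurement update has nothing to compare against, which is why SLAM must estimate map and pose jointly.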

This thesis proposes a robust visual navigation system that allows an outdoor autonomous mobile robot to move autonomously and precisely through the field. The aim is an extensible robot that can serve as a base for different functions, such as automatic application of crop-protection (phytosanitary) products and monitoring of plant growth, among other case studies in which the developed system will be evaluated.

Advisors: Matias Di Martino, Gonzalo Tejera

Hardware

Robots

The following robots will be used during the thesis:

Ikus: Ikus is an autonomous mobile robot for research, created by the MINA group of the Facultad de Ingeniería - UDeLaR. It is a mobile, extensible platform, fully integrated with ROS, and uses an NVIDIA Jetson TX2 as its computing platform.

Jackal: Jackal is a research robotics platform for outdoor use. It has an onboard computer, GPS and IMU, all fully integrated with ROS.

Visual SLAM projects

RTAB-Map (Real-Time Appearance-Based Mapping) is an RGB-D Graph SLAM approach based on a global Bayesian loop closure detector. The loop closure detector uses a bag-of-words approach to determine how likely it is that a new image comes from a previous location or from a new one. When a loop closure hypothesis is accepted, a new constraint is added to the map's graph, and a graph optimizer then minimizes the errors in the map. A memory management approach limits the number of locations used for loop closure detection and graph optimization, so that real-time constraints are always respected in large-scale environments. RTAB-Map can be used alone with a handheld Kinect or stereo camera for 6DoF RGB-D mapping, or on a robot equipped with a laser rangefinder for 3DoF mapping.
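To make the bag-of-words idea concrete, the sketch below scores a new image against past locations using TF-IDF weighted visual-word histograms and cosine similarity, accepting the best match only above a threshold. This is a simplified stand-in, not RTAB-Map's code: RTAB-Map maintains a Bayesian filter over loop closure hypotheses with its own likelihood model. It assumes the image features have already been quantized into integer visual-word IDs; the function names and the `threshold` value are hypothetical.

```python
import math
from collections import Counter


def tfidf_vector(words, doc_freq, n_docs):
    """TF-IDF weights for one image, given its quantized visual-word IDs."""
    counts = Counter(words)
    total = len(words)
    return {
        w: (c / total) * math.log(n_docs / doc_freq[w])
        for w, c in counts.items()
        if doc_freq.get(w, 0) > 0  # words never seen before carry no evidence
    }


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(v * b.get(k, 0.0) for k, v in a.items())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na > 0 and nb > 0 else 0.0


def loop_closure_candidate(new_words, past_locations, threshold=0.5):
    """Return (index, score) of the most similar past location, or
    (None, score) if the best score is below the (hypothetical) threshold."""
    n_docs = len(past_locations)
    doc_freq = Counter(w for words in past_locations for w in set(words))
    query = tfidf_vector(new_words, doc_freq, n_docs)
    scores = [cosine(query, tfidf_vector(words, doc_freq, n_docs))
              for words in past_locations]
    if not scores:
        return None, 0.0
    best = max(range(n_docs), key=scores.__getitem__)
    return (best if scores[best] >= threshold else None), scores[best]
```

For example, `loop_closure_candidate([4, 5, 6, 6], [[1, 2, 3, 3], [4, 5, 6], [7, 8, 9]])` selects location 1, while an image made only of unseen words is treated as a new location. The IDF term down-weights words that appear everywhere (e.g. repetitive crop rows), which is one reason appearance-based loop closure is hard in agricultural fields.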

M. Labbé and F. Michaud, “RTAB-Map as an Open-Source Lidar and Visual SLAM Library for Large-Scale and Long-Term Online Operation,” in Journal of Field Robotics, vol. 36, no. 2, pp. 416–446, 2019.

Code available - ROS

ORB-SLAM2: ORB-SLAM2 is a real-time SLAM library for Monocular, Stereo and RGB-D cameras that computes the camera trajectory and a sparse 3D reconstruction (in the stereo and RGB-D case with true scale). It is able to detect loops and relocalize the camera in real time. We provide examples to run the SLAM system in the KITTI dataset as stereo or monocular, in the TUM dataset as RGB-D or monocular, and in the EuRoC dataset as stereo or monocular. We also provide a ROS node to process live monocular, stereo or RGB-D streams.
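The "true scale" remark follows from the stereo geometry: for a rectified pair with focal length f (pixels) and baseline B (meters), a point with disparity d pixels lies at depth Z = f·B/d, so the known baseline fixes metric units, whereas a single camera has no baseline and its reconstruction is only defined up to scale. The sketch below applies the standard pinhole relation and its first-order error; the calibration numbers in the usage are hypothetical, not those of any camera listed here.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Metric depth Z = f * B / d for a rectified stereo pair (pinhole model)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means a point at infinity)")
    return focal_px * baseline_m / disparity_px


def depth_uncertainty(depth_m, focal_px, baseline_m, disparity_err_px=0.5):
    """First-order depth error: dZ ~= Z^2 / (f * B) * dd, so it grows with Z^2."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    # Hypothetical calibration values, for illustration only.
    z = depth_from_disparity(42.0, focal_px=700.0, baseline_m=0.12)
    print(round(z, 3), round(depth_uncertainty(z, 700.0, 0.12), 4))  # 2.0 0.0238
```

The quadratic growth of the depth error is why stereo depth is most reliable for nearby structure, such as the crop rows immediately in front of the robot.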

Mur-Artal, R., & Tardós, J. D. (2017). ORB-SLAM2: An open-source SLAM system for monocular, stereo, and RGB-D cameras. IEEE Transactions on Robotics, 33(5), 1255-1262.

Code available - ROS

Datasets

- The Rosario Dataset: Multisensor Data for Localization and Mapping in Agricultural Environments

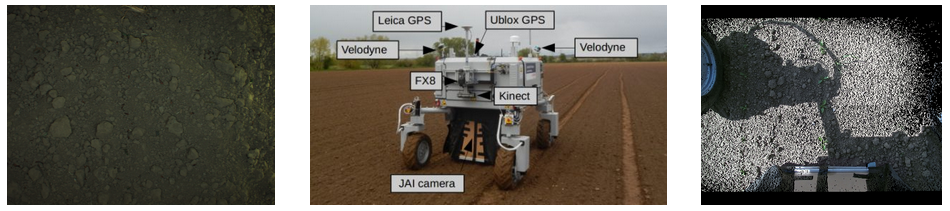

Agricultural dataset collected on board our weed-removing robot. The dataset is composed of six different sequences in a soybean field and contains stereo images, IMU measurements, wheel odometry and GPS-RTK (position ground truth).

Taihú Pire, Martín Mujica, Javier Civera and Ernesto Kofman. The Rosario Dataset: Multisensor Data for Localization and Mapping in Agricultural Environments. In: The International Journal of Robotics Research, 2019.

ZED Stereo Camera

- The 2016 Sugar Beets Dataset Recorded at Campus Klein Altendorf in Bonn, Germany

Agricultural dataset recorded in 2016 on a sugar beet field at Campus Klein Altendorf (Bonn, Germany), intended for research on plant classification, localization and mapping.

Chebrolu, N., Lottes, P., Schaefer, A., Winterhalter, W., Burgard, W., & Stachniss, C. (2017). Agricultural robot dataset for plant classification, localization and mapping on sugar beet fields. The International Journal of Robotics Research, 36(10), 1045-1052.

Kinect, JAI camera

- The Visual-Inertial Canoe Dataset

We present a dataset collected from a canoe along the Sangamon River in Illinois. The canoe was equipped with a stereo camera, an IMU, and a GPS device, which provide visual data suitable for stereo or monocular applications, inertial measurements, and position data for ground truth. We recorded a canoe trip up and down the river for 44 minutes, covering 2.7 km round trip. The dataset adds to those previously recorded in unstructured environments and is unique in that it is recorded on a river, which provides its own set of challenges and constraints.

Miller, Martin; Chung, Soon-Jo; Hutchinson, Seth (2017): The Visual-Inertial Canoe Dataset. University of Illinois at Urbana-Champaign.

Stereo camera, an IMU, and a GPS device

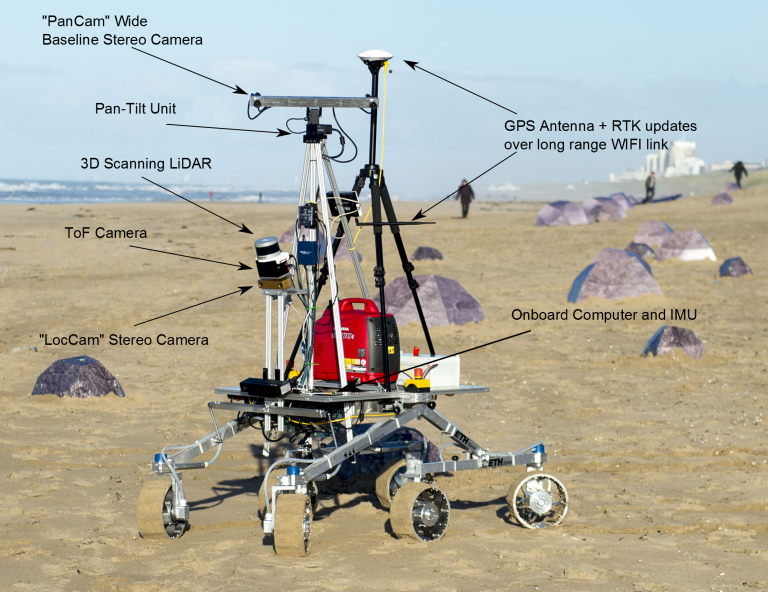

- Katwijk Beach Planetary Rover Dataset

This data collection corresponds to a field test performed in the beach area of Katwijk in The Netherlands (52°12’N 4°24’E). Two separate test runs were performed and the data has been divided accordingly. The first, a ~2 km-long traverse, focuses on global localisation by matching correspondences between features detectable in “orbital” (aerial) images and those seen by the rover during its traverse. The second run is a shorter traverse focusing on enhanced visual odometry with LiDAR sensing. The data from both tests consist of rover proprioceptive sensing (wheel odometry, IMU) and exteroceptive sensing (Stereo LocCam, Stereo PanCam, ToF cameras and LiDAR), plus DGPS ground-truth data and the orthomosaic and DEM maps generated from aerial images taken by a drone. The data should be suitable for research on global and relative localisation, SLAM or subtopics of those, in Global Navigation Satellite System (GNSS)-denied environments, especially with respect to planetary rovers or similar operational scenarios.

The rover development and setup for this dataset's field test campaign was led by Robert A. Hewitt and Evangelos Boukas, both PhD candidates through the Networking Partnering Initiative (NPI) programme of ESA, who worked at the Robotics Section (TEC-MMA) of ESTEC throughout 2015.

- Kagaru Airborne Stereo Dataset

The Kagaru Airborne Dataset is a vision dataset gathered from a radio-controlled aircraft flown at Kagaru, Queensland, Australia on 31/08/2010. The data consists of visual data from a pair of downward-facing cameras, translation and orientation information as ground truth from an XSens MTi-G INS/GPS, and additional information from a Haico HI-206 USB GPS. The dataset traverses farmland and includes views of grass, an air-strip, roads, trees, ponds, parked aircraft and buildings. This dataset has been released for free and public use in testing and evaluating stereo visual odometry and visual SLAM algorithms.

Warren, M., McKinnon, D., He, H., Glover, A., Shiel, M., & Upcroft, B. (2014). Large scale monocular vision-only mapping from a fixed-wing sUAS. In Field and Service Robotics (pp. 495-509). Springer, Berlin, Heidelberg.

Aircraft

- Challenging data sets for point cloud registration algorithms

This group of datasets was recorded with the aim of testing point cloud registration algorithms in specific environments and conditions. Special care is taken regarding the precision of the "ground truth" positions of the scanner, which is in the millimeter range, using a theodolite. Some examples of the recorded environments can be seen below.

F. Pomerleau, M. Liu, F. Colas, and R. Siegwart, "Challenging data sets for point cloud registration algorithms," The International Journal of Robotics Research, vol. 31, no. 14, pp. 1705–1711, Dec. 2012.

Hokuyo UTM-30LX

- The KITTI Vision Benchmark Suite

A project of Karlsruhe Institute of Technology and Toyota Technological Institute at Chicago

The odometry benchmark consists of 22 stereo sequences, saved in lossless PNG format: we provide 11 sequences (00-10) with ground truth trajectories for training and 11 sequences (11-21) without ground truth for evaluation. For this benchmark you may provide results using monocular or stereo visual odometry, laser-based SLAM or algorithms that combine visual and LIDAR information. The only restriction we impose is that your method is fully automatic (e.g., no manual loop-closure tagging is allowed) and that the same parameter set is used for all sequences. A development kit provides details about the data format.
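As a starting point for using the training ground truth, the sketch below reads a KITTI odometry pose file, where each line holds 12 numbers forming a row-major 3×4 transform [R | t] relative to the first frame, and computes the distance travelled. It is a minimal illustration, not the official development kit; the function names are hypothetical, and the official metric (relative errors averaged over sub-sequences of different lengths) is not reproduced here.

```python
import math


def parse_kitti_poses(lines):
    """Each non-empty line holds 12 floats: a row-major 3x4 matrix [R | t]."""
    poses = []
    for line in lines:
        values = [float(v) for v in line.split()]
        if not values:
            continue
        if len(values) != 12:
            raise ValueError(f"expected 12 values per pose, got {len(values)}")
        poses.append([values[0:4], values[4:8], values[8:12]])
    return poses


def positions(poses):
    """Translation column t = (x, y, z) of every 3x4 pose."""
    return [(p[0][3], p[1][3], p[2][3]) for p in poses]


def path_length(poses):
    """Total distance travelled along the trajectory, in the poses' units."""
    pts = positions(poses)
    return sum(math.dist(a, b) for a, b in zip(pts, pts[1:]))
```

In practice the lines would come from `open(path)` on one of the ground-truth files; keeping the parser independent of file I/O makes it easy to test on a few hand-written lines.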

Stereo cameras, LiDAR - Street/traffic - Car

- HRI Datasets

Honda Research Institute

Honda Research Institute datasets are introduced to enable research on traffic scene understanding, prediction, driver modeling, motion planning, and related research that are important for intelligent mobility.

Street/traffic - Car

- Oxford RobotCar Dataset

Oxford Robotics Institute

The Oxford RobotCar Dataset contains over 100 repetitions of a consistent route through Oxford, UK, captured over a period of over a year. The dataset captures many different combinations of weather, traffic and pedestrians, along with longer term changes such as construction and roadworks.

W. Maddern, G. Pascoe, C. Linegar and P. Newman, "1 Year, 1000km: The Oxford RobotCar Dataset", The International Journal of Robotics Research (IJRR), 2016.

Street/traffic - Car

- Others:

- Argoverse: 3D Tracking and Forecasting with Rich Maps

- And more...

- RGB-D SLAM Dataset and Benchmark

Computer Vision Group - TUM Department of Informatics - Technical University of Munich

We provide a large dataset containing RGB-D data and ground-truth data, with the goal of establishing a novel benchmark for the evaluation of visual odometry and visual SLAM systems. The dataset contains the color and depth images of a Microsoft Kinect sensor along with the ground-truth trajectory of the sensor. The data was recorded at full frame rate (30 Hz) and sensor resolution (640x480). The ground-truth trajectory was obtained from a high-accuracy motion-capture system with eight high-speed tracking cameras (100 Hz). Further, we provide the accelerometer data from the Kinect. Finally, we propose an evaluation criterion for measuring the quality of the estimated camera trajectory of visual SLAM systems.
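The benchmark's trajectory files use one pose per line, `timestamp tx ty tz qx qy qz qw`, with `#` marking comment lines. The sketch below parses two such files, pairs each estimated pose with the nearest ground-truth timestamp, and computes the RMSE of the translational error (an absolute trajectory error). It is a simplified sketch under stated assumptions: both trajectories are assumed to already be in the same frame, whereas the benchmark's own tools also align them with a rigid-body fit before computing the error; the greedy association, the `max_dt` tolerance, and the function names are hypothetical choices.

```python
import bisect
import math


def read_tum_trajectory(lines):
    """Parse 'timestamp tx ty tz qx qy qz qw' lines; '#' starts a comment.
    Returns {timestamp: (tx, ty, tz)} (orientation is ignored here)."""
    traj = {}
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = [float(v) for v in line.split()]
        if len(fields) != 8:
            raise ValueError(f"expected 8 fields, got {len(fields)}")
        traj[fields[0]] = tuple(fields[1:4])
    return traj


def associate(ground_truth, estimate, max_dt=0.02):
    """Pair each estimated pose with the nearest ground-truth timestamp
    (simplified greedy matching; a ground-truth stamp may be reused)."""
    gt_times = sorted(ground_truth)
    pairs = []
    for t in sorted(estimate):
        i = bisect.bisect_left(gt_times, t)
        candidates = gt_times[max(i - 1, 0):i + 1]
        if not candidates:
            continue
        nearest = min(candidates, key=lambda g: abs(g - t))
        if abs(nearest - t) <= max_dt:
            pairs.append((ground_truth[nearest], estimate[t]))
    return pairs


def ate_rmse(pairs):
    """Root-mean-square translational error over associated pose pairs.
    Assumes both trajectories are already expressed in the same frame."""
    if not pairs:
        raise ValueError("no associated poses")
    return math.sqrt(sum(math.dist(g, e) ** 2 for g, e in pairs) / len(pairs))
```

Association is needed because the ground truth (100 Hz) and the camera-rate estimate (30 Hz) are sampled at different instants; estimated poses with no ground-truth stamp within `max_dt` are simply dropped.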

Microsoft Kinect sensor - Office environments, hall.

- ADVIO: An Authentic Dataset for Visual-Inertial Odometry

Santiago Cortés · Arno Solin · Esa Rahtu · Juho Kannala

The lack of realistic and open benchmarking datasets for pedestrian visual-inertial odometry has made it hard to pinpoint differences in published methods. Existing datasets either lack a full six degree-of-freedom ground-truth or are limited to small spaces with optical tracking systems. We take advantage of advances in pure inertial navigation, and develop a set of versatile and challenging real-world computer vision benchmark sets for visual-inertial odometry. For this purpose, we have built a test rig equipped with an iPhone, a Google Pixel Android phone, and a Google Tango device. We provide a wide range of raw sensor data that is accessible on almost any modern-day smartphone together with a high-quality ground-truth track. We also compare resulting visual-inertial tracks from Google Tango, ARCore, and Apple ARKit with two recent methods published in academic forums. The data sets cover both indoor and outdoor cases, with stairs, escalators, elevators, office environments, a shopping mall, and metro station.

Pedestrian - Phone - Indoor and outdoor cases, with stairs, escalators, elevators, office environments, a shopping mall, and metro station.

Tests carried out

In October 2021 we made our first visit to INIA with the Jackal robot, fitted with the new computing platform, cameras and GPS.

Example of a run and its point cloud

2022 runs

Links of interest

MINA: Website of the Network Management / Artificial Intelligence research group, which works in two major areas: the management and control of computer networks, and artificial intelligence applied to mobile robotics.

Sumo.UY: Website of the Sumo.UY event.

Butiá Project: Website of the Butiá project.